
How to Use StreamMultiDiffusion Online?

Introduction

StreamMultiDiffusion is a framework for generating images from text descriptions quickly and with fine-grained spatial control. Developed by researchers from Seoul National University, it combines diffusion models with region-based text prompts. This guide walks through how to use StreamMultiDiffusion online, step by step.

Understanding StreamMultiDiffusion

Before diving in, it helps to understand StreamMultiDiffusion's core strengths. The framework is built for real-time interactive image generation. Its semantic palette lets users control image synthesis by pairing multiple hand-drawn regions with corresponding text prompts, while its stream batch architecture and compatibility with fast inference techniques keep generation responsive.
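To make the idea of region-based prompts more concrete, here is a minimal sketch of mask-weighted averaging, the general technique that region-based diffusion methods use to merge per-prompt predictions into one image. It is an illustration only, not the framework's actual code: the function name `blend_regions`, the tiny 1x4 grids, and the numeric values are all hypothetical.

```python
from typing import List

Grid = List[List[float]]


def blend_regions(predictions: List[Grid], masks: List[Grid], eps: float = 1e-8) -> Grid:
    """Merge per-prompt predictions with a mask-weighted average, pixel by pixel."""
    height, width = len(masks[0]), len(masks[0][0])
    blended = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            weight_sum = sum(mask[y][x] for mask in masks)
            value_sum = sum(
                mask[y][x] * pred[y][x] for pred, mask in zip(predictions, masks)
            )
            # eps avoids division by zero where no region was painted
            blended[y][x] = value_sum / (weight_sum + eps)
    return blended


# Hypothetical per-prompt "predictions" (one value per pixel) and their masks
sky = [[1.0, 1.0, 1.0, 1.0]]
sea = [[5.0, 5.0, 5.0, 5.0]]
sky_mask = [[1.0, 1.0, 0.5, 0.0]]
sea_mask = [[0.0, 0.0, 0.5, 1.0]]

result = blend_regions([sky, sea], [sky_mask, sea_mask])
print([round(v, 2) for v in result[0]])
```

Where only one mask is active, the output follows that prompt alone. Where the masks overlap, the output is a weighted mix, which is why soft (nonbinary) masks give smoother transitions between regions.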

Step-by-Step Guide

1. Visit the StreamMultiDiffusion Online Demo

First, open the StreamMultiDiffusion online demo.

2. Understand the User Interface

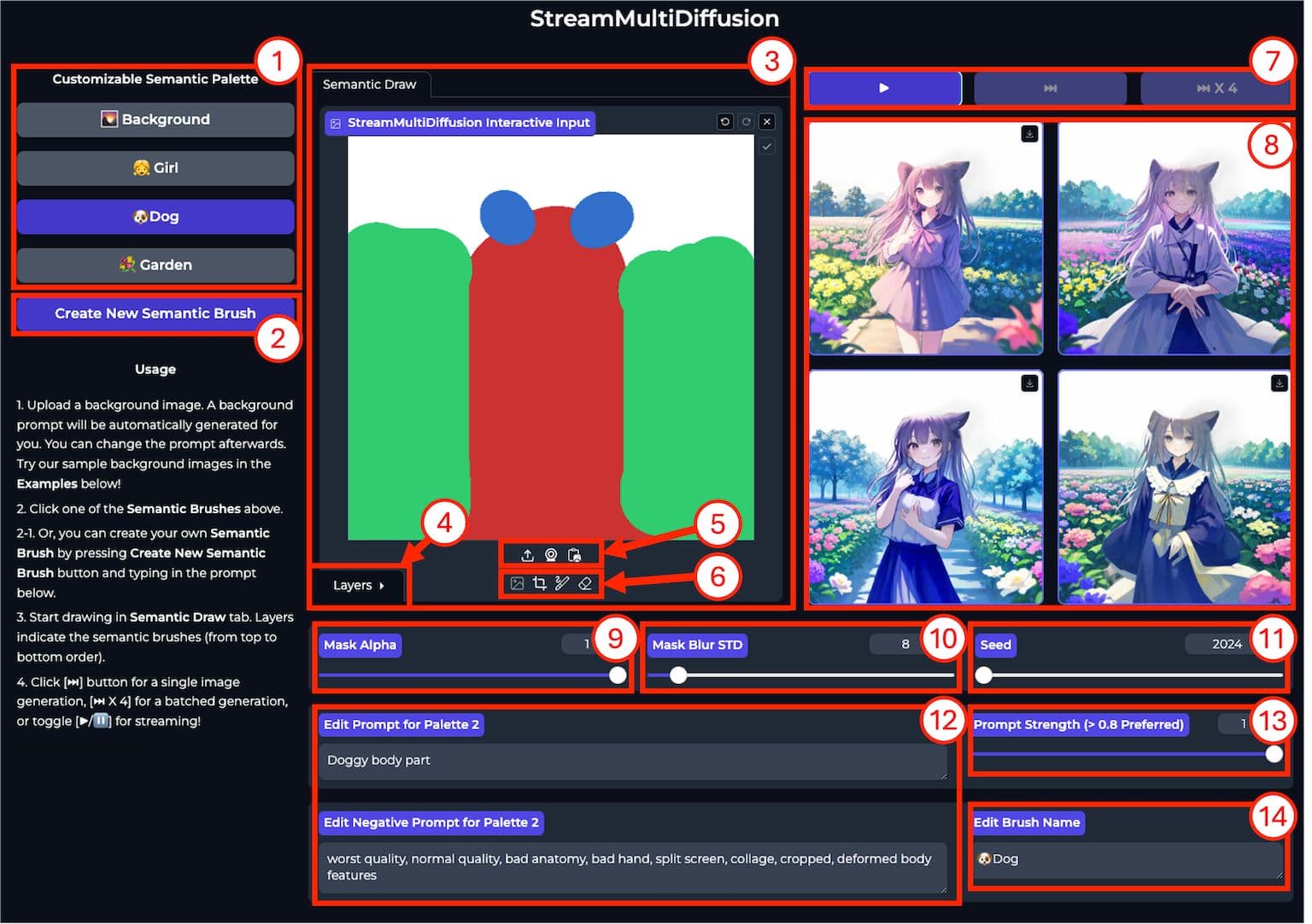

| No. | Component Name | Description |
|---|---|---|
| 1 | Semantic palette | Creates and manages text prompt-mask pairs, a.k.a. semantic brushes. |
| 2 | Create new semantic brush button | Creates a new text prompt-mask pair. |
| 3 | Main drawing pad | User draws at each semantic layer with a brush tool. |
| 4 | Layer selection | Each layer corresponds to each of the prompt masks in the semantic palette. |
| 5 | Background image upload | User uploads a background image to start drawing. |
| 6 | Drawing tools | Use brushes and erasers to interactively edit the prompt masks. |
| 7 | Play button | Switches between streaming/step-by-step mode. |
| 8 | Display | Generated images are streamed through this component. |
| 9 | Mask alpha control | Changes the mask alpha value before quantization. Controls local content blending (simply means that you can use nonbinary masks for fine-grained controls), but extremely sensitive. Recommended: >0.95 |
| 10 | Mask blur std. dev. control | Changes the standard deviation of the quantized mask of the current semantic brush. Less sensitive than mask alpha control. |
| 11 | Seed control | Changes the seed of the application. May not be needed, since we generate an infinite stream of images. |
| 12 | Prompt edit | User can interactively change the positive/negative prompts as needed. |
| 13 | Prompt strength control | Prompt embedding mix ratio between the current & the background. Helps global content blending. Recommended: >0.75 |
| 14 | Brush name edit | Adds convenience by changing the name of the brush. Does not affect the generation. Just for preference. |

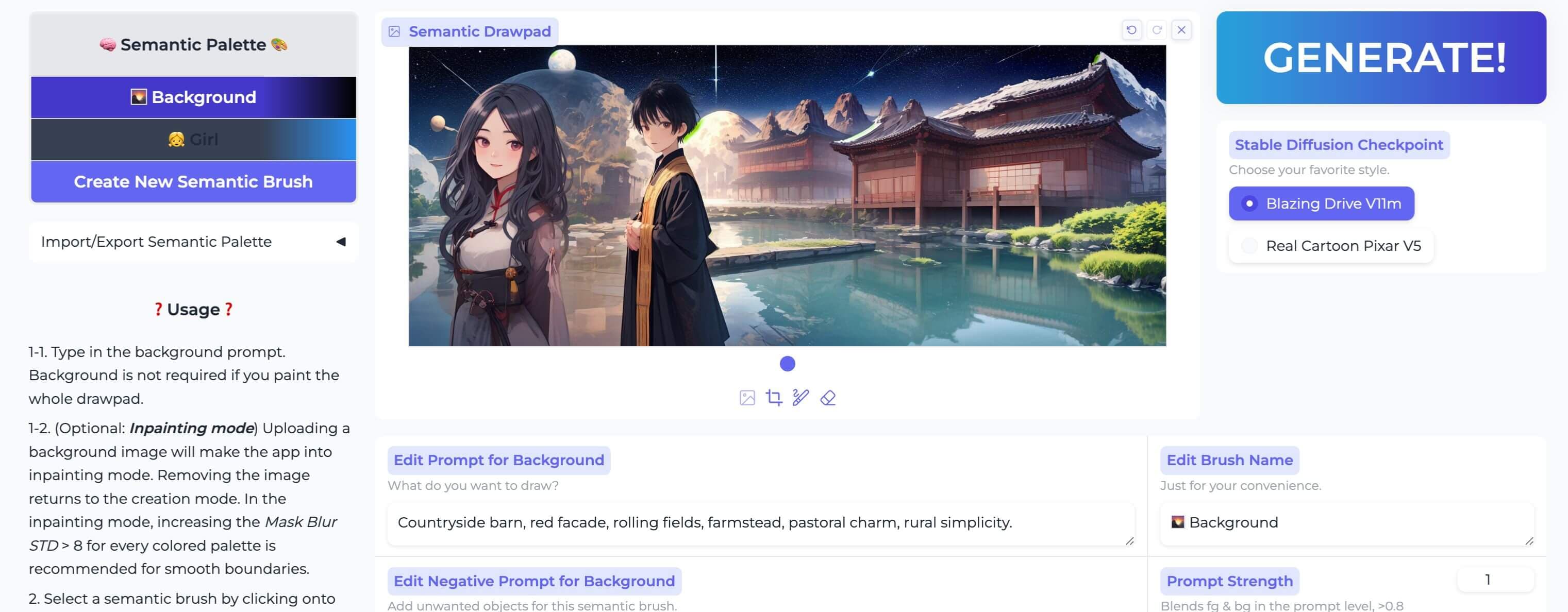
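The mask alpha and mask blur controls (components 9 and 10) are easier to reason about with numbers. The sketch below shows, on a single row of a mask, what scaling by an alpha value and applying a Gaussian blur do to a hard-edged region. It is a simplified illustration under stated assumptions: the helper names are hypothetical, the quantization step mentioned in the table is omitted, and the demo's real implementation may order or compute these steps differently.

```python
import math
from typing import List


def gaussian_kernel(std: float) -> List[float]:
    """Normalized 1D Gaussian kernel covering about three standard deviations."""
    if std <= 0:
        return [1.0]  # a zero std. dev. means no blur
    radius = max(1, math.ceil(3 * std))
    weights = [math.exp(-(i * i) / (2 * std * std)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_row(row: List[float], std: float) -> List[float]:
    """Blur one mask row, repeating the edge values at the borders."""
    kernel = gaussian_kernel(std)
    radius = len(kernel) // 2
    last = len(row) - 1
    blurred = []
    for x in range(len(row)):
        acc = 0.0
        for offset, weight in zip(range(-radius, radius + 1), kernel):
            neighbor = min(max(x + offset, 0), last)  # clamp to the row
            acc += weight * row[neighbor]
        blurred.append(acc)
    return blurred


def soften_mask_row(binary_row: List[int], alpha: float, blur_std: float) -> List[float]:
    """Scale a binary mask row by alpha, then feather its edges with a blur."""
    scaled = [alpha * value for value in binary_row]
    return blur_row(scaled, blur_std)


row = [0, 0, 0, 1, 1, 1, 0, 0, 0]
hard = soften_mask_row(row, alpha=1.0, blur_std=0.0)   # unchanged hard edges
soft = soften_mask_row(row, alpha=0.95, blur_std=1.0)  # feathered, slightly transparent
print([round(v, 2) for v in soft])
```

In this sketch, alpha lowers the peak mask value everywhere at once, while the blur only changes values near the region boundary. That difference is one way to read the table's note that mask alpha is far more sensitive than mask blur.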

3. Upload a Background Image

Click the background image upload area (component 5 in the table above) and select the image you want to modify.

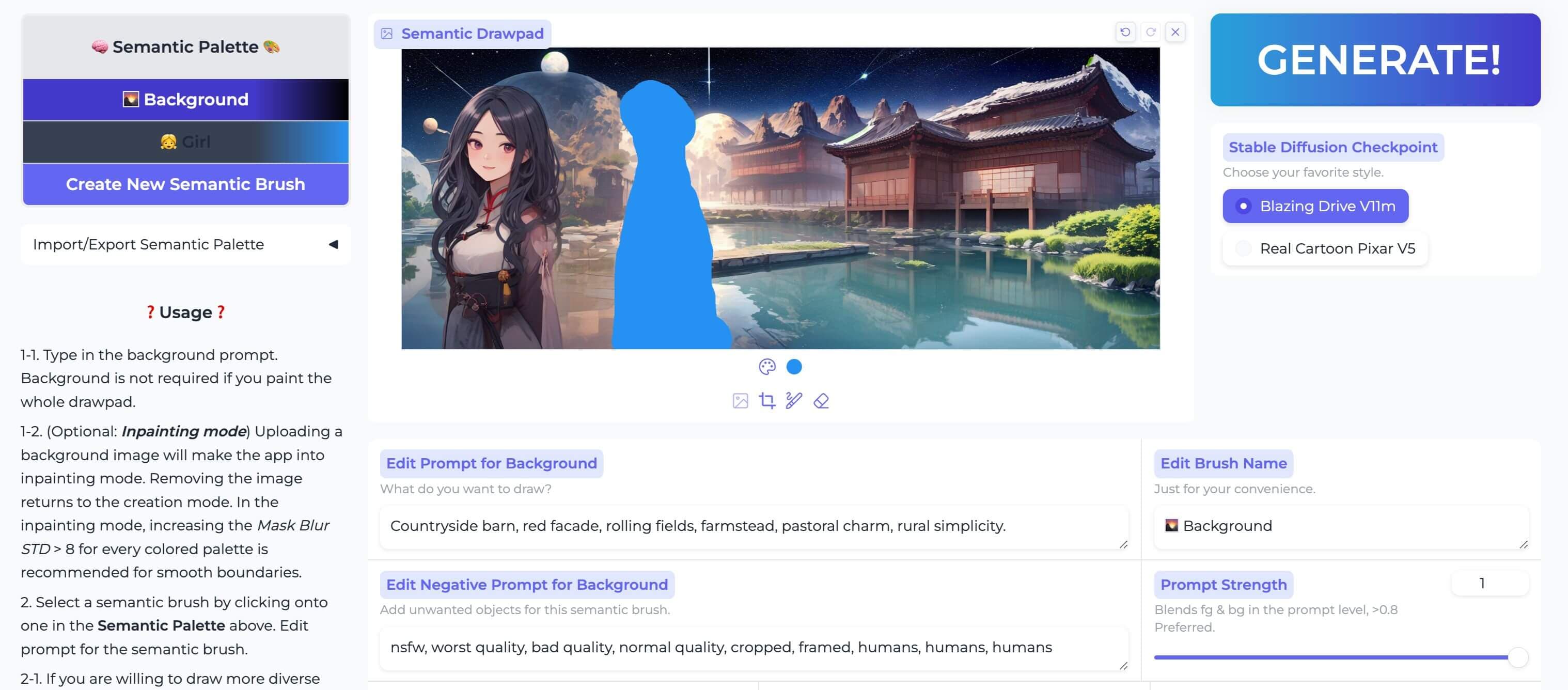

Then use the brush to paint over the area you want to edit.

Note that the color you paint with should match the color of the corresponding entry in the semantic palette on the left. The first entry defaults to blue.
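One plausible reason the paint color matters is that each color identifies which prompt a painted region belongs to. The sketch below shows how per-brush masks could be recovered from a painted canvas by color matching. This is an assumption made for illustration, not a description of the demo's internals: `masks_from_canvas`, the brush names, and the color values are all hypothetical.

```python
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]


def masks_from_canvas(
    canvas: List[List[Color]],
    brush_colors: Dict[str, Color],
    tolerance: int = 0,
) -> Dict[str, List[List[int]]]:
    """Build one binary mask per brush by matching pixel colors to brush colors."""
    height, width = len(canvas), len(canvas[0])
    masks = {name: [[0] * width for _ in range(height)] for name in brush_colors}
    for y, row in enumerate(canvas):
        for x, pixel in enumerate(row):
            for name, color in brush_colors.items():
                # A pixel belongs to a brush if every channel is within tolerance
                if all(abs(p - c) <= tolerance for p, c in zip(pixel, color)):
                    masks[name][y][x] = 1
    return masks


WHITE: Color = (255, 255, 255)   # unpainted background
BLUE: Color = (0, 0, 255)        # hypothetical color of the first brush
RED: Color = (255, 0, 0)         # hypothetical color of a second brush
OFF_BLUE: Color = (0, 0, 200)    # painted with a color that matches no brush

canvas = [
    [WHITE, BLUE, BLUE],
    [RED, OFF_BLUE, WHITE],
]
masks = masks_from_canvas(canvas, {"sky": BLUE, "rose": RED})
print(masks["sky"])
print(masks["rose"])
```

Under this assumption, the `OFF_BLUE` pixel ends up in no mask at all, which would explain why strokes painted in a mismatched color have no effect on the result.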

4. Create Semantic Brushes

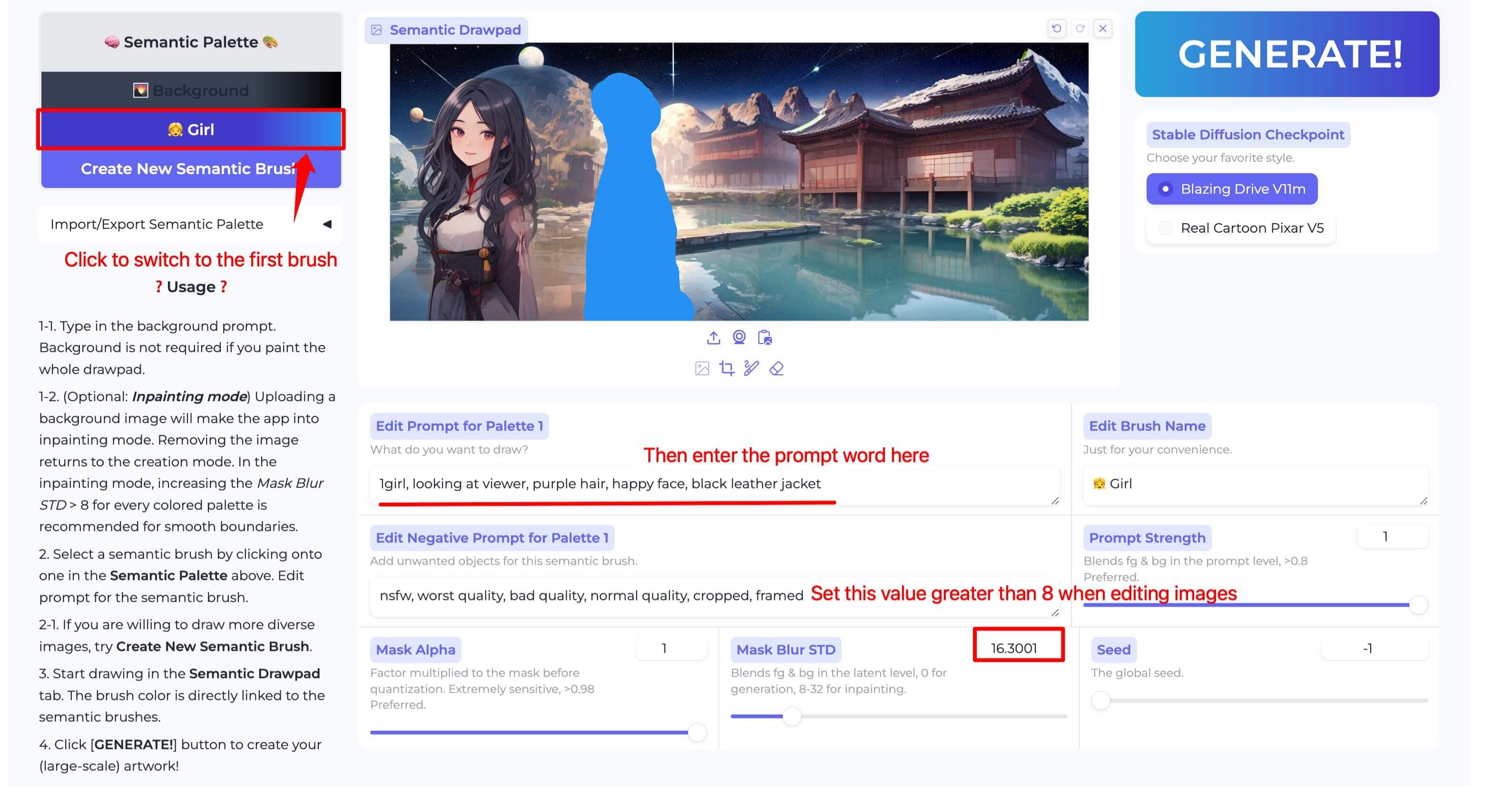

One semantic brush is already created by default. Select it, then edit its prompts and its mask blur standard deviation (Mask Blur STD).

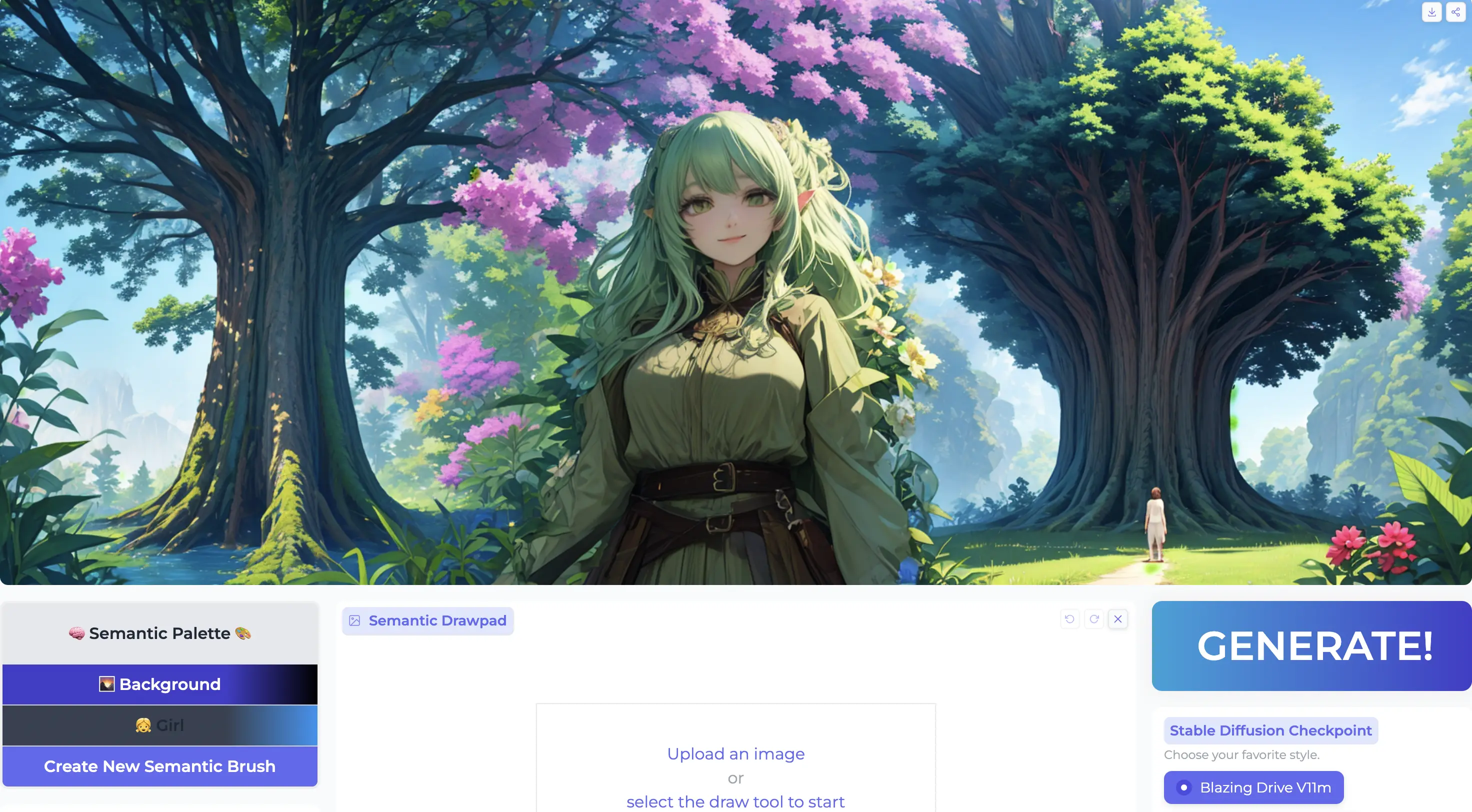
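It can help to think of a semantic brush as a small record of settings. The sketch below models one as a data class, checks the values against the recommendations in the component table (mask alpha above 0.95, prompt strength above 0.75), and shows prompt strength as a linear mix between two embeddings. Everything here is hypothetical: the class, its field names, its defaults, and the mixing direction (strength 1.0 meaning "brush prompt only") are assumptions for illustration, not the demo's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SemanticBrush:
    """Hypothetical container for the per-brush settings shown in the UI."""
    name: str
    prompt: str
    negative_prompt: str = ""
    mask_alpha: float = 1.0       # assumed default, not taken from the demo
    mask_blur_std: float = 0.0    # assumed default, not taken from the demo
    prompt_strength: float = 1.0  # assumed default, not taken from the demo

    def warnings(self) -> List[str]:
        """Return notes for values outside the ranges the table recommends."""
        notes = []
        if self.mask_alpha <= 0.95:
            notes.append("mask_alpha is at or below 0.95; blending is very sensitive here")
        if self.prompt_strength <= 0.75:
            notes.append("prompt_strength is at or below 0.75; the background prompt may dominate")
        if self.mask_blur_std < 0:
            notes.append("mask_blur_std should not be negative")
        return notes


def mix_embeddings(brush: List[float], background: List[float], strength: float) -> List[float]:
    """Linear mix of two embeddings: strength=1.0 keeps only the brush prompt."""
    return [strength * b + (1.0 - strength) * g for b, g in zip(brush, background)]


brush = SemanticBrush(name="sky", prompt="clear blue sky", mask_alpha=0.9, prompt_strength=0.8)
for note in brush.warnings():
    print(note)

# Toy 2-dimensional "embeddings" to show the mix ratio
print(mix_embeddings([1.0, 0.0], [0.0, 1.0], strength=0.75))
```

Keeping the settings in one record like this also makes it straightforward to note down a combination that worked and re-enter it in the UI later.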

5. Start Drawing

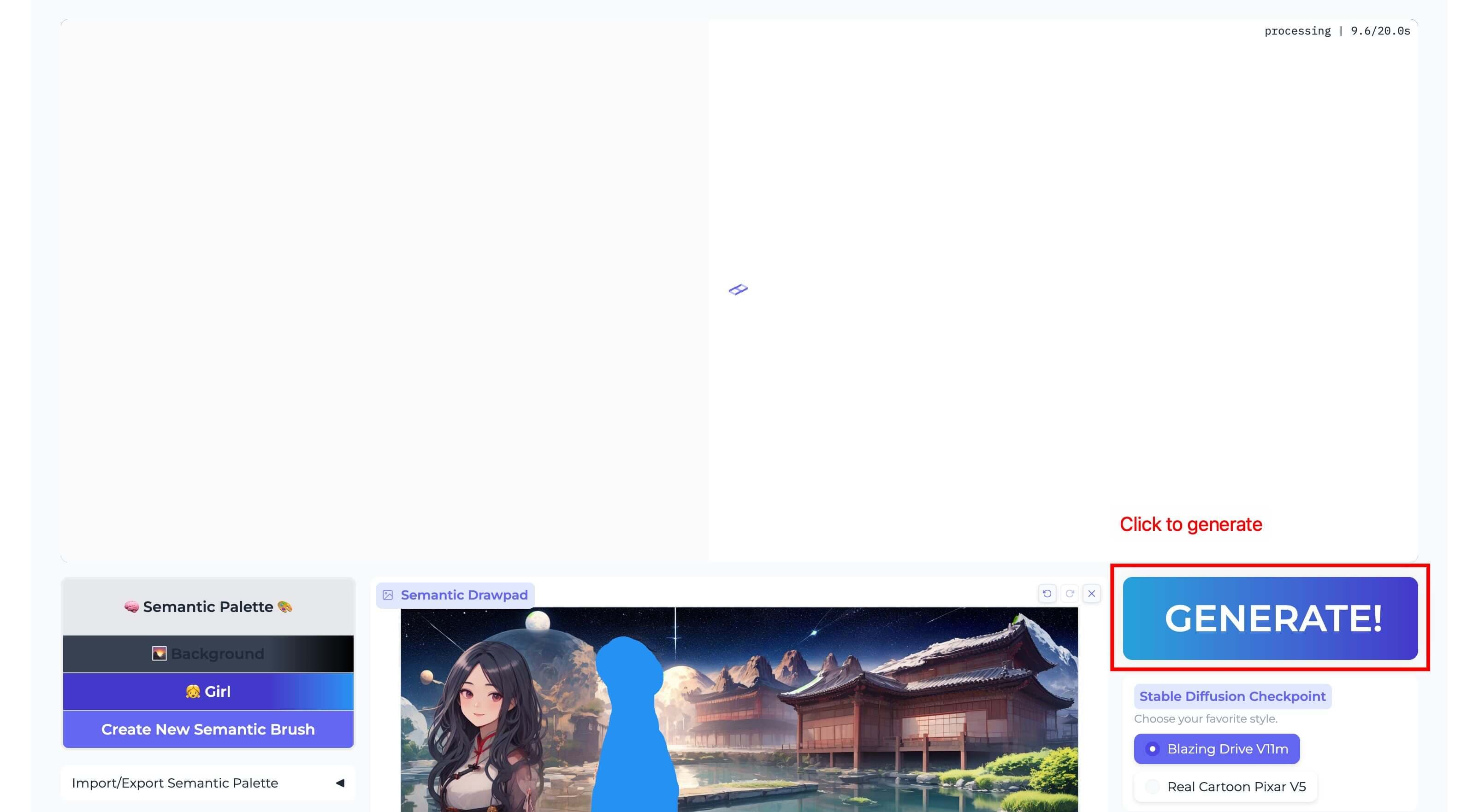

Click the "GENERATE" button to start generating the image.

6. View the Result

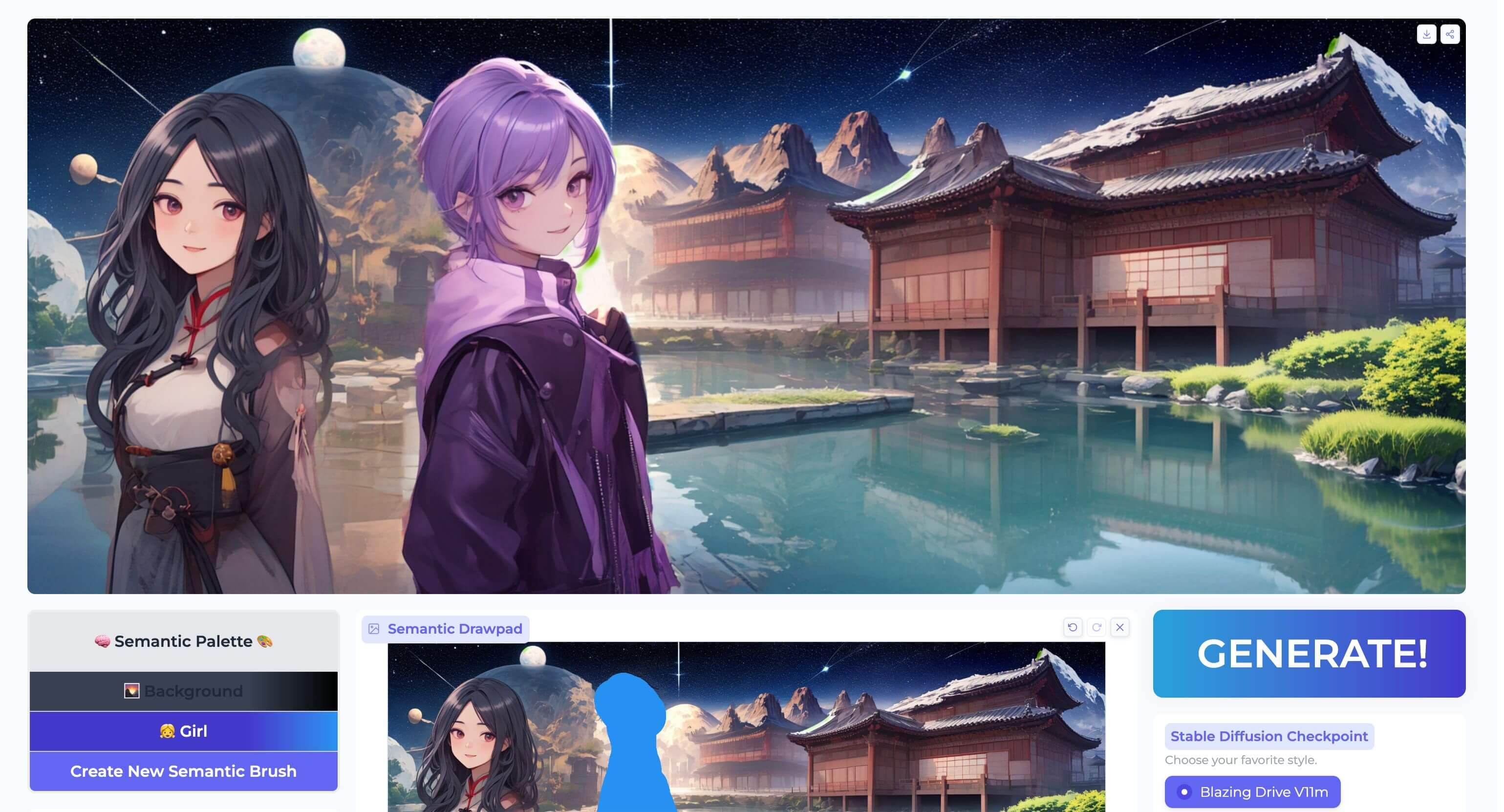

After waiting for approximately 30 seconds, you will see the modified result.

The steps above are the simplest way to use the application. You can also create multiple semantic brushes, or upload a blank image to create images from scratch, which will be covered next.

Conclusion

StreamMultiDiffusion makes AI-driven image generation more accessible and easier to control. By following these steps, users can create personalized images quickly, with region-level control over what appears where. As the technology continues to advance, the range of practical applications is likely to grow.

For those who want to go further, the StreamMultiDiffusion GitHub repository is the starting point. With a little technical knowledge and imagination, users can turn their textual and visual ideas into AI-generated images.

- Author: Ethan Sunray (@ethansunray)